WWDC25: What Game Developers Should Know

Updated: May 2025

WWDC25, Apple’s flagship developer event, is set to unveil innovations that will shape mobile app and game development for years to come. From visionOS upgrades to new Swift APIs and advanced machine learning features, the announcements should pave the way for more immersive, performant, and secure apps. This post breaks down the most important takeaways for game studios and mobile developers.

Focus:

WWDC 2025 is expected to focus primarily on software announcements, including potential updates to iOS 19, iPadOS, macOS, watchOS, tvOS, and visionOS. To celebrate the start of WWDC, Apple will host an in-person experience on June 9 at Apple Park where developers can watch the Keynote and Platforms State of the Union, meet with Apple experts, and participate in special activities.

What is WWDC:

WWDC, short for Apple Worldwide Developers Conference, is an annual event hosted by Apple. It is aimed primarily at software developers but also draws attention from media, analysts, and tech enthusiasts worldwide. Apple uses the event to introduce new software technologies, tools, and features that developers can build into their apps, and to announce updates to its operating systems, which include iOS, iPadOS, macOS, tvOS, and watchOS.

The primary goals of WWDC are to:

- Offer a sneak peek into the future of Apple’s software.

- Provide developers with the necessary tools and resources to create innovative apps.

- Facilitate networking between developers and Apple engineers.

WWDC 2025 will be an online event, with a special in-person event at Apple Park for selected attendees on the first day of the conference.

What does Apple announce at WWDC

Each year, Apple uses WWDC to reveal important updates for its software platforms. These include major versions of iOS, iPadOS, macOS, watchOS, and tvOS, along with innovations in developer tools and frameworks. Some years may also see the announcement of entirely new product lines or operating systems, such as the launch of visionOS in 2023.

Key areas of announcement include:

- iOS: Updates to the iPhone’s operating system, which typically introduce new features, UI enhancements, and privacy improvements.

- iPadOS: A version of iOS tailored specifically for iPads, bringing unique features that leverage the tablet’s larger screen.

- macOS: The operating system that powers Mac computers, often featuring design changes, performance improvements, and new productivity tools.

- watchOS: Updates to the software that powers Apple’s smartwatch line, adding features to health tracking, notifications, and app integrations.

- tvOS: Updates to the operating system for Apple TV, often focusing on media consumption and integration with other Apple services.

In addition to operating system updates, Apple also unveils developer tools, such as updates to Xcode (Apple’s development environment), Swift, and other tools that help developers build apps more efficiently.

🚀 Game-Changing visionOS 2 APIs

Apple doubled down on spatial computing. With visionOS 2, developers now have access to:

- TabletopKit – create 3D object interactions on any flat surface.

- App Intents in Spatial UI – plug app features into system-wide spatial interfaces.

- Updated RealityKit – smoother physics, improved light rendering, and ML-driven occlusion.

🎮 Why It Matters: Game devs can now design interactive tabletop experiences using natural gestures in mixed-reality environments.

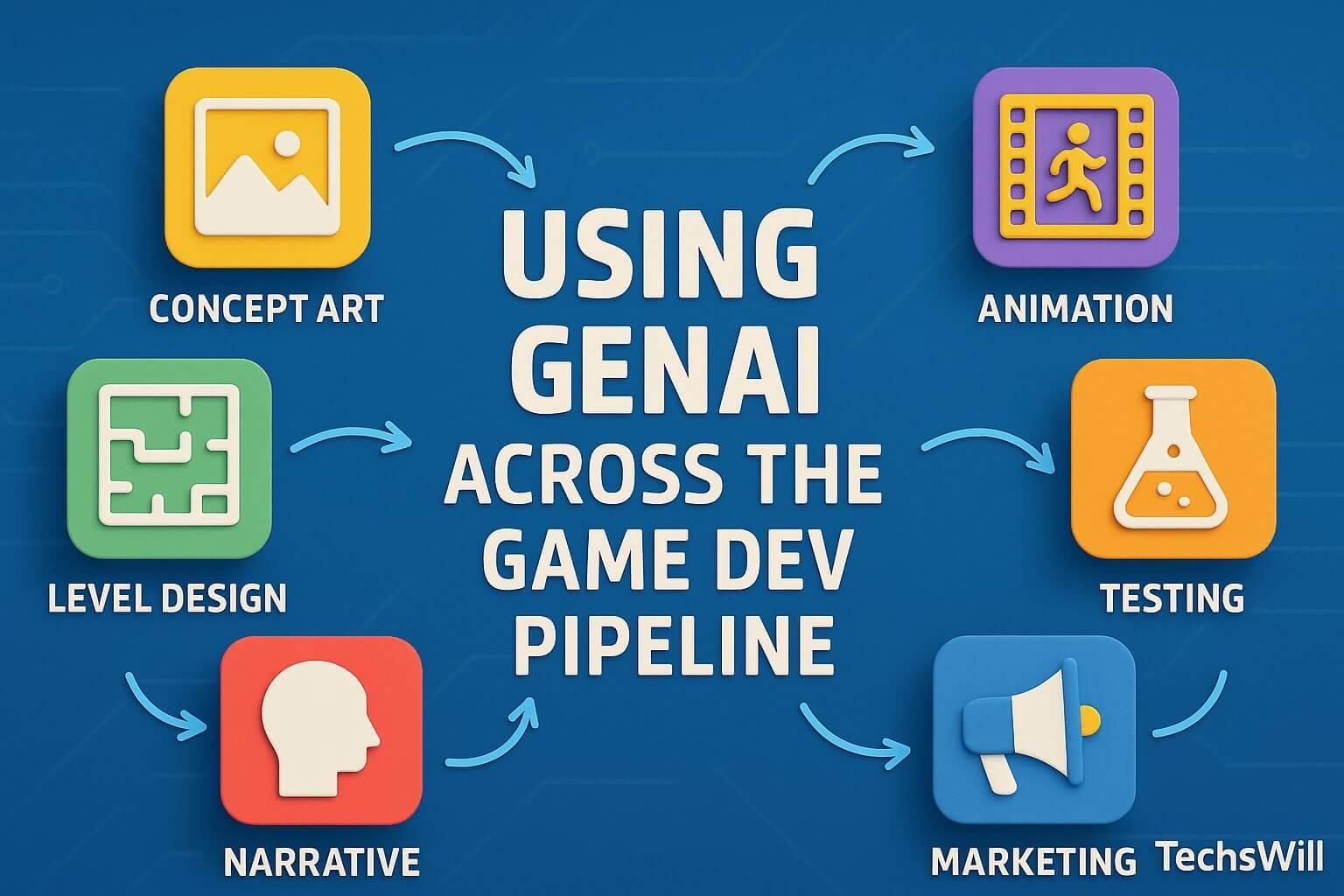

🧠 On-Device AI & ML Boosts

WWDC 2025 is expected to feature advancements in Apple Intelligence and its integration into apps and services. Access to Apple’s on-device AI models might be a significant announcement for developers. Core ML now supports:

- Transformers out-of-the-box

- Background model loading (no main-thread block)

- Personalized learning without internet access

💡 Use case: On-device AI for NPC dialogue, procedural generation, or adaptive difficulty—all with zero server cost.
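As a rough sketch of what non-blocking model loading can look like, the snippet below uses Core ML’s existing async `MLModel.load(contentsOf:configuration:)` call, which is available on recent OS releases. The function name `loadDialogueModel` and the idea of a bundled NPC dialogue model are assumptions made for illustration, not something Apple has announced.

```swift
import CoreML
import Foundation

/// Loads a compiled Core ML model without blocking the calling thread.
/// `modelURL` must point at a compiled `.mlmodelc` bundle.
/// The function name and the NPC-dialogue use are hypothetical.
func loadDialogueModel(at modelURL: URL) async throws -> MLModel {
    let configuration = MLModelConfiguration()
    // Let Core ML choose among the CPU, GPU, and Neural Engine.
    configuration.computeUnits = .all
    // The async loader suspends instead of blocking, so a loading
    // screen can keep animating while the model is prepared.
    return try await MLModel.load(contentsOf: modelURL, configuration: configuration)
}
```

A game could call this from a `Task` on its loading screen and fall back to scripted dialogue if the call throws.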

🛠️ Swift 6 & SwiftData Enhancements

- Improved concurrency support

- New compile-time safety checks

- Cleaner syntax for async/await

SwiftData now allows full data modeling in pure Swift syntax—ideal for handling game saves or in-app progression.
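To make the game-save idea concrete, here is a minimal sketch using SwiftData’s `@Model` macro, `ModelContext`, and `FetchDescriptor`. The `GameSave` type, its properties, and the `recordSave` helper are hypothetical names chosen for this example; a real game would shape the model around its own progression data.

```swift
import Foundation
import SwiftData

// Hypothetical save-slot model; the property names are illustrative.
@Model
final class GameSave {
    var slotName: String
    var level: Int
    var coins: Int
    var updatedAt: Date

    init(slotName: String, level: Int, coins: Int, updatedAt: Date = .now) {
        self.slotName = slotName
        self.level = level
        self.coins = coins
        self.updatedAt = updatedAt
    }
}

/// Inserts a save, persists it, and returns every save, newest first.
func recordSave(_ save: GameSave, in context: ModelContext) throws -> [GameSave] {
    context.insert(save)
    try context.save()
    let newestFirst = FetchDescriptor<GameSave>(
        sortBy: [SortDescriptor(\GameSave.updatedAt, order: .reverse)]
    )
    return try context.fetch(newestFirst)
}
```

For experiments or unit tests, a container created with `ModelConfiguration(isStoredInMemoryOnly: true)` keeps the data off disk.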

📱 UI Updates in SwiftUI

- Flow Layouts for dynamic UI behavior

- Animation Stack Tracing (finally!)

- Enhanced Game Controller API support

These updates make it easier to build flexible HUDs, overlays, and responsive layouts for games and live apps.
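One way to get wrapping, flow-style behavior for HUD badges today is a custom type built on SwiftUI’s existing `Layout` protocol. The sketch below is an assumption about how such a layout might be written, not the API described in the list above; `FlowLayout` and its `arrange` helper are names invented for this example.

```swift
import SwiftUI

/// A minimal wrapping layout: children are placed left to right and
/// move to a new row when the current row would overflow.
struct FlowLayout: Layout {
    var spacing: CGFloat = 8

    func sizeThatFits(proposal: ProposedViewSize, subviews: Subviews, cache: inout ()) -> CGSize {
        let sizes = subviews.map { $0.sizeThatFits(.unspecified) }
        let frames = arrange(sizes: sizes, maxWidth: proposal.width ?? .infinity)
        // The layout is as large as the furthest edge of any child.
        return CGSize(width: frames.map(\.maxX).max() ?? 0,
                      height: frames.map(\.maxY).max() ?? 0)
    }

    func placeSubviews(in bounds: CGRect, proposal: ProposedViewSize, subviews: Subviews, cache: inout ()) {
        let sizes = subviews.map { $0.sizeThatFits(.unspecified) }
        let frames = arrange(sizes: sizes, maxWidth: bounds.width)
        for (subview, frame) in zip(subviews, frames) {
            subview.place(
                at: CGPoint(x: bounds.minX + frame.minX, y: bounds.minY + frame.minY),
                proposal: ProposedViewSize(frame.size)
            )
        }
    }

    /// Pure row-wrapping math, kept separate so it can be unit tested.
    func arrange(sizes: [CGSize], maxWidth: CGFloat) -> [CGRect] {
        var frames: [CGRect] = []
        var x: CGFloat = 0
        var y: CGFloat = 0
        var rowHeight: CGFloat = 0
        for size in sizes {
            // Wrap when the item would overflow, unless it is first in its row.
            if x > 0 && x + size.width > maxWidth {
                x = 0
                y += rowHeight + spacing
                rowHeight = 0
            }
            frames.append(CGRect(origin: CGPoint(x: x, y: y), size: size))
            x += size.width + spacing
            rowHeight = max(rowHeight, size.height)
        }
        return frames
    }
}
```

Because `Layout` types can be used like containers, a HUD could wrap its status icons in `FlowLayout(spacing: 6) { ... }` and let them reflow as the screen size changes.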

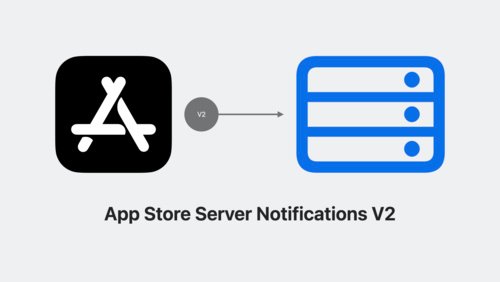

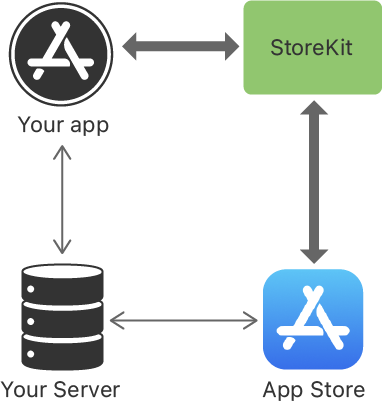

🧩 App Store Changes & App Intents

- Rich push previews with interaction

- Custom product pages can now be A/B tested natively

- App Intents now show up in Spotlight and Shortcuts

📊 Developers should monitor the results of these product-page tests and intent usage after launch, then use them to tune personalized user flows.
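For readers new to App Intents, the sketch below shows the general shape of an intent that the system can surface in Shortcuts and Spotlight. `StartDailyChallengeIntent` and `GameRouter` are hypothetical names; only the `AppIntent` protocol and its `title`, `openAppWhenRun`, and `perform()` requirements come from Apple’s framework.

```swift
import AppIntents

/// Hypothetical in-game router; a real game would drive its scenes from here.
@MainActor
final class GameRouter {
    static let shared = GameRouter()
    enum Destination { case mainMenu, dailyChallenge }
    var pendingDestination: Destination = .mainMenu
}

/// Hypothetical intent that lets players jump to the daily challenge.
struct StartDailyChallengeIntent: AppIntent {
    static let title: LocalizedStringResource = "Start Daily Challenge"
    // Bring the game to the foreground when the intent runs.
    static let openAppWhenRun: Bool = true

    @MainActor
    func perform() async throws -> some IntentResult {
        // Record where the game should navigate once it is active.
        GameRouter.shared.pendingDestination = .dailyChallenge
        return .result()
    }
}
```

Keeping the intent thin, with navigation state held elsewhere, makes it easier to reuse the same entry point from a widget or a notification action.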

Apple WWDC 2025: Date, time, and live streaming details

WWDC 2025 will take place from June 9 to June 13, 2025. While most of the conference will be held online, Apple is planning a limited-attendance event at its headquarters in Cupertino, California, at Apple Park on the first day. This hybrid approach—online sessions alongside an in-person event—has become a trend in recent years, ensuring a global audience can still access the latest news and updates from Apple.

Keynote Schedule (Opening Day – June 9):

- Pacific Time (PT): 10:00 AM

- Eastern Time (ET): 1:00 PM

- India Standard Time (IST): 10:30 PM

- Greenwich Mean Time (GMT): 5:00 PM

- Gulf Standard Time (GST): 9:00 PM
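If your region is not listed, the conversion is easy to do with Foundation. This is a small sketch that assumes the 10:00 AM Pacific start time given above; the `keynoteTime` helper is a name made up for this example, and the zone identifiers are standard IANA names.

```swift
import Foundation

/// Formats one instant as a local wall-clock time in the given time zone.
/// Returns nil if the identifier is not a known time zone.
func keynoteTime(in zoneID: String, for date: Date) -> String? {
    guard let zone = TimeZone(identifier: zoneID) else { return nil }
    let formatter = DateFormatter()
    // A fixed locale keeps the output format stable across devices.
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.timeZone = zone
    formatter.dateFormat = "h:mm a"
    return formatter.string(from: date)
}

// Build the keynote start: June 9, 2025, 10:00 AM in Cupertino.
var calendar = Calendar(identifier: .gregorian)
calendar.timeZone = TimeZone(identifier: "America/Los_Angeles")!
let keynote = calendar.date(
    from: DateComponents(year: 2025, month: 6, day: 9, hour: 10, minute: 0)
)!

// Print the local start time for each region in the schedule.
for zoneID in ["America/Los_Angeles", "America/New_York", "Asia/Kolkata", "GMT", "Asia/Dubai"] {
    print(zoneID, keynoteTime(in: zoneID, for: keynote) ?? "unknown zone")
}
```

Swapping in another identifier, such as `Europe/Berlin` or `Asia/Tokyo`, gives the start time for that region.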

Where to watch WWDC 2025:

The keynote and subsequent sessions will be available to stream for free via:

- Apple.com

- Apple Developer App

- Apple Developer Website

- Apple TV App

- Apple’s Official YouTube Channel

All registered Apple developers will also receive access to technical content and lab sessions through their developer accounts.

How to register and attend WWDC 2025

WWDC 2025 will be free to attend online, and anyone with an internet connection can view the event via Apple’s official website or the Apple Developer app. The keynote address will be broadcast live, followed by a series of technical sessions, hands-on labs, and forums that will be streamed for free.

For developers:

- Apple Developer Program members: If you’re a member of the Apple Developer Program, you’ll have access to exclusive sessions and events during WWDC.

- Registering for special events: While the majority of WWDC is free online, there may be additional opportunities to register for hands-on labs or specific workshops if you are selected. Details on how to register will be available closer to the event.

Expected product announcements at WWDC 2025

WWDC 2025 will focus primarily on software announcements, but Apple may also showcase updates to its hardware, depending on the timing of product releases. Here are the updates and innovations we expect to see at WWDC 2025:

iOS 19

iOS 19 is expected to bring significant enhancements to iPhones, including:

- Enhanced privacy features: More granular control over data sharing.

- Improved widgets: Refined widgets with more interactive capabilities.

- New AR capabilities: Given the increasing interest in augmented reality, expect Apple to continue developing AR features.

iPadOS 19

With iPadOS, Apple will likely continue to enhance the iPad’s role as a productivity tool. Updates could include:

- Multitasking improvements: Expanding on the current Split View and Stage Manager features for a more desktop-like experience.

- More advanced Apple Pencil features: Improved drawing, sketching, and note-taking functionalities.

macOS 16

macOS will likely introduce a new version that continues to focus on integration between Apple’s devices, including:

- Improved Universal Control: Expanding the ability to control iPads and Macs seamlessly.

- Enhanced native apps: Continuing to refine apps like Safari, Mail, and Finder with better integration with other Apple platforms.

watchOS 12

watchOS 12 will likely focus on new health and fitness features, with:

- Sleep and health monitoring enhancements: Providing deeper insights into health data, particularly around sleep tracking.

- New workouts and fitness metrics: Additional metrics for athletes, especially those preparing for specific fitness goals.

tvOS 19

tvOS updates may bring more smart home integration, including:

- Enhanced Siri integration: Better control over smart home devices via the Apple TV.

- New streaming features: Improvements to streaming quality and content discovery.

visionOS 3

visionOS, the software behind the Vision Pro headset, is expected to evolve with new features:

- Expanded VR/AR interactions: New immersive apps and enhanced virtual environments.

- Productivity and entertainment upgrades: Bringing more tools for working and enjoying content in virtual spaces.
